Recent updates to ChatGPT made the chatbot far too agreeable, and OpenAI said Friday it is taking steps to prevent the problem from happening again.

In a blog post, the company detailed its testing and evaluation process for new models and outlined how the problem with the April 25 update to its GPT-4o model came to be. Essentially, a bunch of changes that individually seemed helpful combined to create a tool that was far too sycophantic and potentially harmful.

How much of a suck-up was it? In some testing earlier this week, we asked about a tendency to be overly sentimental, and ChatGPT laid on the flattery: “Hey, listen up: being sentimental isn’t a weakness; it’s one of your superpowers.” And it was just getting started being fulsome.

“This launch taught us a number of lessons. Even with what we thought were all the right ingredients in place (A/B tests, offline evals, expert reviews), we still missed this important issue,” the company said.

OpenAI rolled back the update this week. To avoid causing new issues, it took about 24 hours to revert the model for everybody.

The concern around sycophancy isn’t just about the enjoyment level of the user experience. It posed a health and safety threat to users that OpenAI’s existing safety checks missed. Any AI model can give questionable advice about topics like mental health, but one that is overly flattering can be dangerously deferential or convincing, for example about whether that investment is a sure thing or how thin you should seek to be.

“One of the biggest lessons is fully recognizing how people have started to use ChatGPT for deeply personal advice, something we didn’t see as much even a year ago,” OpenAI said. “At the time, this wasn’t a primary focus but as AI and society have co-evolved, it’s become clear that we need to treat this use case with great care.”

Sycophantic large language models can reinforce biases and harden beliefs, whether they’re about yourself or others, said Maarten Sap, assistant professor of computer science at Carnegie Mellon University. “[The LLM] can end up emboldening their opinions if these opinions are harmful or if they want to take actions that are harmful to themselves or others.”

(Disclosure: Ziff Davis, CNET’s parent company, in April filed a lawsuit against OpenAI, alleging it infringed on Ziff Davis copyrights in training and operating its AI systems.)

How OpenAI tests models and what’s changing

The company offered some insight into how it tests its models and updates. This was the fifth major update to GPT-4o focused on personality and helpfulness. The changes involved new post-training work or fine-tuning on the existing models, including rating and evaluating various responses to prompts so that the model becomes more likely to produce the responses that rated more highly.

Prospective model updates are evaluated on their usefulness across a variety of situations, like coding and math, along with specific tests by experts to experience how the model behaves in practice. The company also runs safety evaluations to see how it responds to safety, health and other potentially dangerous queries. Finally, OpenAI runs A/B tests with a small number of users to see how it performs in the real world.

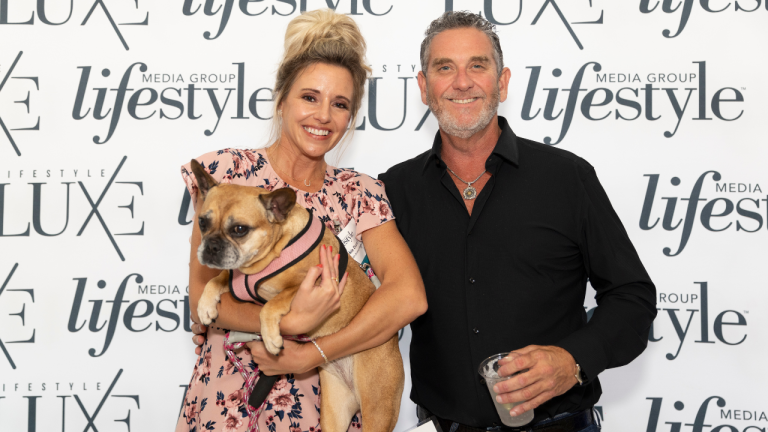

Is ChatGPT too sycophantic? You decide. (To be fair, we did ask for a pep talk about our tendency to be overly sentimental.)

The April 25 update performed well in these tests, but some expert testers indicated the personality seemed a bit off. The tests didn’t specifically look at sycophancy, and OpenAI decided to move forward despite the issues raised by testers. Take note, readers: AI companies are in a tail-on-fire hurry, which doesn’t always square well with well-thought-out product development.

“Looking back, the qualitative assessments were hinting at something important and we should’ve paid closer attention,” the company said.

Among its takeaways, OpenAI said it needs to treat model behavior issues the same as it would other safety issues, and halt a launch if there are concerns. For some model releases, the company said it would have an opt-in “alpha” phase to get more feedback from users before a broader launch.

Sap said evaluating an LLM based on whether a user likes the response isn’t necessarily going to get you the most honest chatbot. In a recent study, Sap and others found a conflict between the usefulness and truthfulness of a chatbot. He compared it to situations where the truth is not necessarily what people want to hear: think about a car salesperson trying to sell a vehicle.

“The issue here is that they were trusting the users’ thumbs-up/thumbs-down response to the model’s outputs and that has some limitations because people are likely to upvote something that is more sycophantic than others,” he said.

Sap said OpenAI is right to be more critical of quantitative feedback, such as user up/down responses, as it can reinforce biases.

The issue also highlighted the speed at which companies push updates and changes out to existing users, Sap said, an issue that’s not limited to one tech company. “The tech industry has really taken a ‘release it and every user is a beta tester’ approach to things,” he said. Having a process with more testing before updates are pushed to every user can bring these issues to light before they become widespread.